Welcome to Nural's newsletter where you will find a compilation of articles, news and cool companies, all focusing on how AI is being used to tackle global grand challenges.

Our aim is to make sure that you are always up to date with the most important developments in this fast-moving field.

Packed inside we have

- Has Google's LaMDA artificial intelligence really achieved sentience?

- Oregon dropping AI tool used in child abuse cases

- and Jurassic-X: Crossing the Neuro-Symbolic Chasm with the MRKL System - An alternative to large language models

If you would like to support our continued work from £1 then click here!

Marcel Hedman

Key Recent Developments

Has Google's LaMDA artificial intelligence really achieved sentience?

What: Blake Lemoine, an engineer at Google, has claimed that the firm's LaMDA artificial intelligence is sentient, but the expert consensus is that this is not the case. The engineer has subsequently been suspended for sharing restricted information. A transcript of the conversation shows Lemoine and the system discussing the nature of consciousness.

However, the claims that the system is sentient are largely unfounded.

Takeaway: Whether or not this particular system is sentient, this event once again raises the question of whether sentience can ever be reached. If it can, how would we even recognise it? As large language models become more adept at engaging in human conversation, will we be able to distinguish simple statistical matching from true sentience?

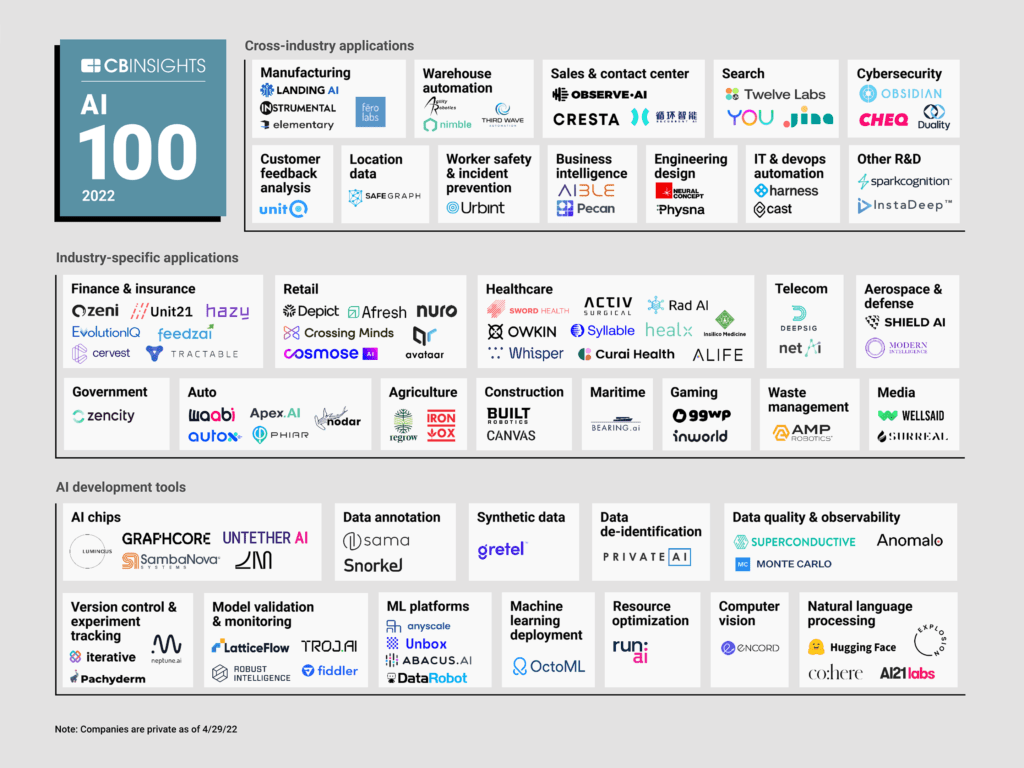

AI 100: The most promising artificial intelligence startups of 2022

What: The AI 100 is CB Insights' annual list of the 100 most promising private AI companies in the world. This year’s winners are working on diverse solutions designed to recycle plastic waste, improve hearing aids, combat toxic online gaming behavior, and more.

Oregon dropping AI tool used in child abuse cases

What: Child welfare officials in Oregon will stop using an algorithm to help decide which families are investigated by social workers, opting instead for a new process that officials say will make better, more racially equitable decisions. The move follows a review of a similar model that was shown to flag a disproportionate number of Black children for “mandatory” neglect investigations.

Key Takeaway: Some decisions are perhaps too important to be left in the hands of an algorithm. Even human-in-the-loop approaches can steer those making the final decision down the wrong course of action. What role, if any, should data-driven approaches play in some of the most precarious social settings?

AI Ethics & Social Good

🚀 AI speaker clones parents’ voices to read bedtime stories in their stead

🚀 Elon Musk’s regulatory woes mount as U.S. moves closer to recalling Tesla’s self-driving software

🚀 Finding Patterns in Epileptic Seizure Data - predicting seizure onset ahead of time

🚀 What does it mean when an AI fails? A Reply to SlateStarCodex’s riff on Gary Marcus

Other interesting reads

🚀 8 surprising ways how to use Jupyter Notebook - including webapps!

Cool companies found this week

Drones

SkySpecs - Using drones and AI to detect future equipment failures before they grind giant wind turbine blades to a halt. The company has recently raised $80m in Series D funding.

Climate

FarmWise - Building systems that help farmers increase operational efficiency, including a weeding robot named Titan. The company has just raised $45m in Series B funding.

And finally... Automated weed picking using machine vision

AI/ML must-knows

MRKL systems - Modular Reasoning, Knowledge and Language systems: a modular, neuro-symbolic architecture that combines large language models, external knowledge sources and discrete reasoning.

Foundation models - Any model trained on broad data at scale that can be adapted (for example, fine-tuned) to a wide range of downstream tasks. Examples include BERT and GPT-3. (See also Transfer learning)

Few-shot learning - Supervised learning in which a model must master a task from only a small number of labelled examples.

Transfer learning - Reusing part or all of a model trained for one task on a new task, with the aim of reducing training time and improving performance.

Generative adversarial network (GAN) - A generative model, trained by pitting a generator against a discriminator, that creates new data instances resembling the training data. GANs can be used to generate fake images.
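For readers who like to see ideas in code, here is a toy Python sketch of the MRKL idea from the glossary above: a router sends each query to a discrete reasoning module (a calculator), an external knowledge source, or a language model. Everything here is an illustrative assumption rather than the actual Jurassic-X implementation: the names `calculator`, `KNOWLEDGE`, `language_model` and `route` are hypothetical, the router is a simple regular expression where a real system would likely use a learned one, and the language model is a stub.

```python
import ast
import operator
import re
from typing import Tuple

# Arithmetic operators the toy calculator module supports.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> str:
    """Discrete reasoning module: evaluate simple arithmetic exactly."""

    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError("unsupported expression")

    return str(_eval(ast.parse(expression, mode="eval").body))


# External knowledge source: a hypothetical stand-in for a database or API.
KNOWLEDGE = {
    "mrkl": "MRKL stands for Modular Reasoning, Knowledge and Language.",
}


def language_model(query: str) -> str:
    """Stub for a large language model (no real model is called here)."""
    return f"[language model would answer: {query!r}]"


# Matches expressions such as "12 * 7" or "3.5 + 2 - 1".
ARITHMETIC = re.compile(r"\d+(?:\.\d+)?(?:\s*[-+*/]\s*\d+(?:\.\d+)?)+")


def route(query: str) -> Tuple[str, str]:
    """Pick a module for the query and return (module name, answer)."""
    match = ARITHMETIC.search(query)
    if match:
        return "calculator", calculator(match.group())
    for key, fact in KNOWLEDGE.items():
        if key in query.lower():
            return "knowledge", fact
    return "language_model", language_model(query)


if __name__ == "__main__":
    for q in ["What is 12 * 7?", "What does MRKL stand for?", "Write me a poem"]:
        print(q, "->", route(q))
```

The point of the modular design is that arithmetic and factual lookups are handled by components that are exact and easy to update, leaving the language model to handle only what the other modules cannot.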

Best,

Marcel Hedman

Nural Research Founder

www.nural.cc

If this has been interesting, share it with a friend who will find it equally valuable. If you are not already a subscriber, then subscribe here.

If you are enjoying this content and would like to support the work financially then you can amend your plan here from £1/month!