Welcome to Nural's newsletter where you will find a compilation of articles, news and cool companies, all focusing on how AI is being used to tackle global grand challenges.

Our aim is to make sure that you are always up to date with the most important developments in this fast-moving field.

Packed inside we have:

- Google pushes state of the art with breakthrough PaLM language model

- Meta (Facebook) to implement end-to-end encryption in support of human rights

- plus, IBM advances "neuromorphic" AI with artificial synapses

If you would like to support our continued work from £1 then click here!

Graham Lane & Marcel Hedman

Key Recent Developments

Google trains a 540B parameter language model with Pathways, achieving ‘breakthrough performance’

What: Google recently unveiled Pathways, a novel method of training large AI models in a highly efficient manner. A Google research team used this new approach to train a huge 540 billion parameter language model using 780 billion tokens of high-quality, curated text.

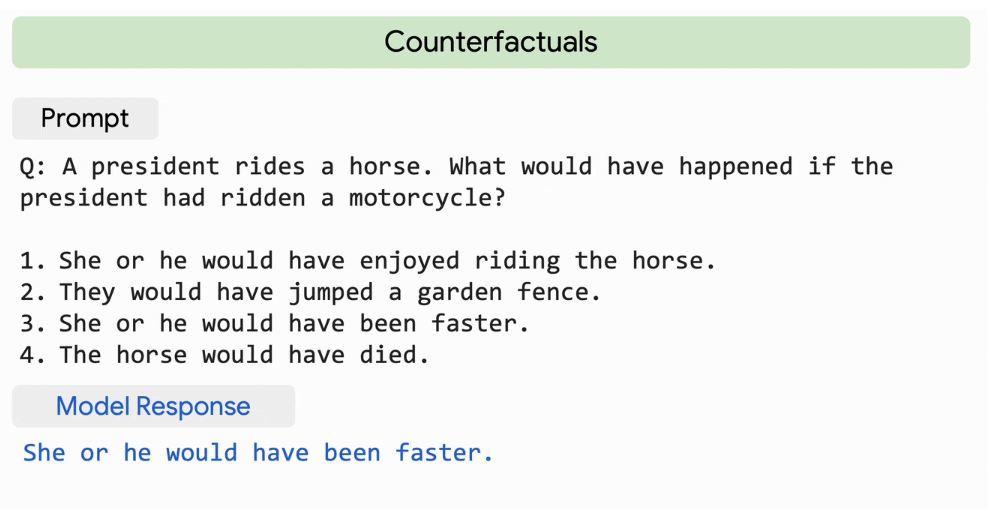

The model was evaluated across hundreds of natural language, code, and mathematical reasoning tasks, and achieved state-of-the-art results on the vast majority of these benchmarks, typically by significant margins. The researchers also claim breakthrough performance, exceeding average human performance on a new benchmark of particularly difficult tasks. On this benchmark, the model continued to improve steeply as the number of parameters was increased. The researchers also carried out a detailed study of bias and toxicity in the model.

Key Takeaways: Large language models have been criticised for unsustainable resource usage and concerns that performance may not improve with scaling. However, this research demonstrates improved training efficiency while also concluding that “performance improvements from scale have not yet plateaued”.

Blog: Pathways Language Model (PaLM): Scaling to 540 billion parameters for breakthrough performance

Meta tries to break the end-to-end encryption deadlock

What: Facebook (now Meta) commissioned an independent report about end-to-end encryption from Business for Social Responsibility (BSR). The report took more than two years to complete. BSR found that end-to-end encryption is overwhelmingly positive and crucial for protecting human rights. The report also considered criminal activity and violent extremism, and recommended possible mitigations. These include safe and responsive reporting channels for users, and analysis of unencrypted metadata to catch potentially problematic activity without scanning or accessing the content of communications.

Key Takeaways: Meta-owned WhatsApp already implements end-to-end encryption, whereas Meta's other services do not. Meta has, however, recently implemented end-to-end encryption for Instagram messaging in Ukraine and Russia in response to Russia's invasion of Ukraine. There is a long-standing tension between the requirements of law enforcement and the protection of privacy rights. Based on this report, Meta is planning end-to-end encryption for all its services by 2023. A spokesperson said “… you don’t need to choose between privacy and safety, you can have both …”.

Blog: Independent assessment: Expanding end-to-end encryption protects fundamental human rights

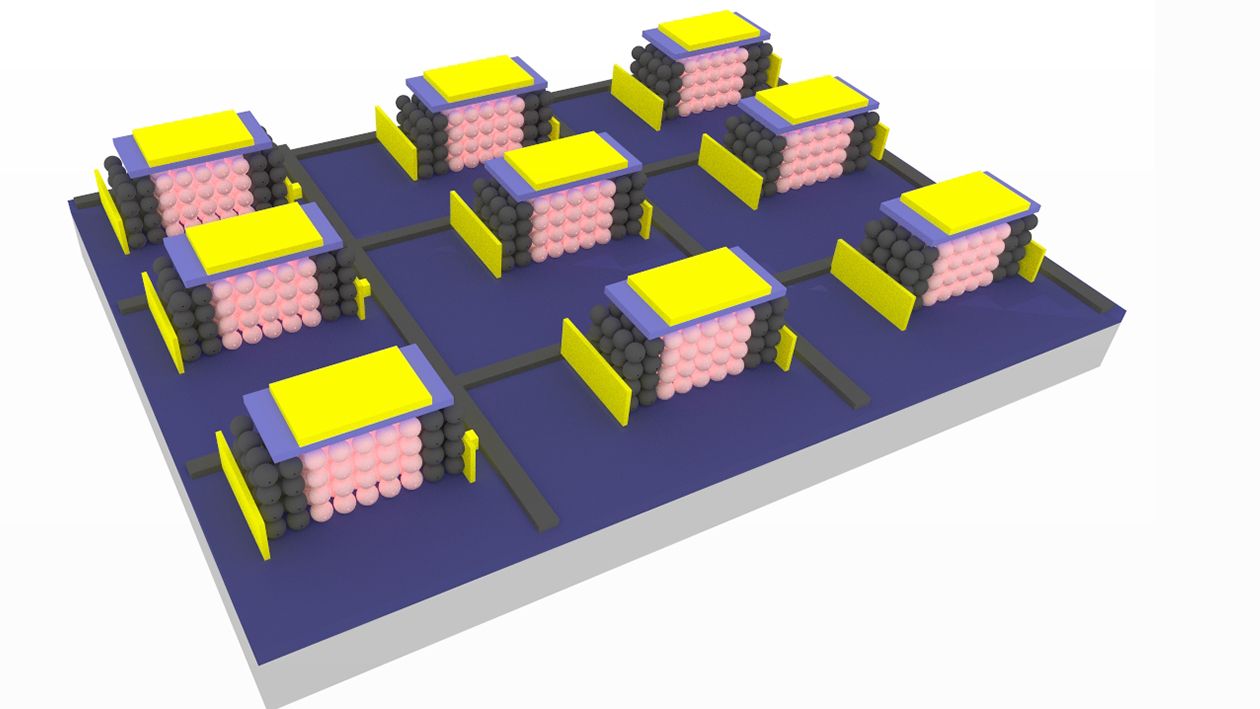

IBM develops new artificial "memtransistive" synapse

What: A human brain has no difficulty recognising a playful kitten running and hiding, but this is very difficult for current AI. The IBM research lab has addressed this problem of processing data streams that are both continuous and sequential by designing AI hardware that more closely resembles the human brain. This involves creating a new form of artificial synapse with greater time-related plasticity. Current AI emulates biological synapses through the “weights” of the model, with the major drawback that these can only be changed by retraining. The proposed non-von Neumann AI hardware could help create machines that recognise objects more like humans do.

Key Takeaways: The results of the current research are “more exploratory than actual system-level demonstrations” but are pushing the boundaries of “neuromorphic” computing.

Paper: Phase-change memtransistive synapses for mixed-plasticity neural computations

AI Ethics

🚀 The medical algorithmic audit

A detailed proposal for a medical algorithmic audit framework.

🚀 AI-influenced weapons need better regulation

The weapons are error-prone and could hit the wrong targets.

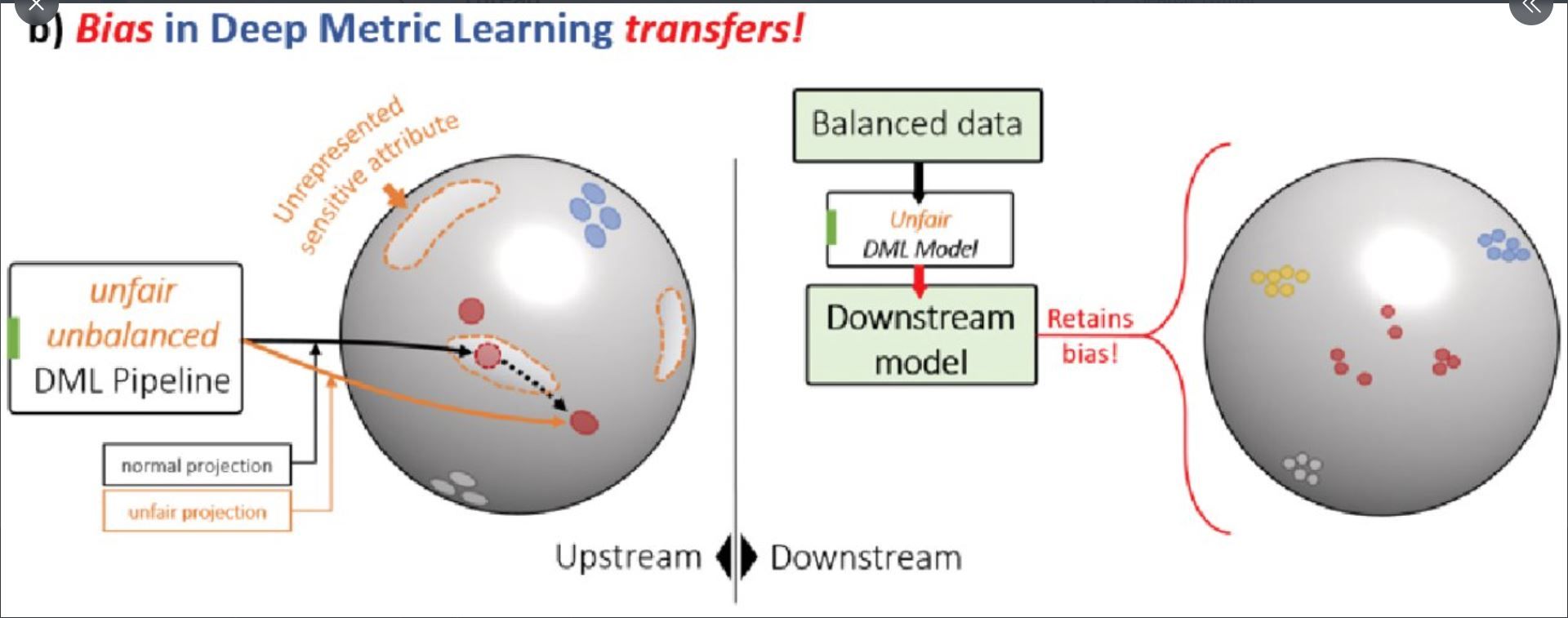

🚀 Bias in representations from deep learning is propagated even when training data in the downstream task is re-balanced

Other interesting reads

🚀 On scientific understanding with AI

Many scientists are wary of relying on ML models in the absence of a detailed understanding of how the results were achieved. But what does "scientific understanding" really entail? This paper discusses.

🚀 Orlando Economic Partnership plans first regional digital twin

The planned digital twin of Orlando, Florida will allow "utility providers, real estate developers, city planners and airport authorities to work off a common visual representation".

🚀 ‘A ghost is driving the car’ — my peaceful and productive experience in a Waymo self-driving van

A detailed account of a passenger ride in a Waymo vehicle without a safety driver. This comes as Waymo announces plans to expand driverless rides to San Francisco.

Cool companies found this week

Natural Language Processing

Inflection - is "an AI-first company, redefining human-computer interaction". It is currently venture funded and in an “incubation” stage. The company has a remarkable founding team: Reid Hoffman (the billionaire co-founder of LinkedIn), a co-founder of DeepMind and a high-profile DeepMind researcher. It has apparently also been successful in recruiting additional top talent from Meta AI, DeepMind and Google.

Lilt - offers translation technology and services through a human-in-the-loop AI approach that creates machine learning models from both human and machine intelligence. The company has raised $55 million in Series C funding.

No-code

Thunkable - is the latest in a series of startups developing no-code platforms, in this case for building native mobile apps. The platform lets users create an app and publish it directly to app marketplaces [...and what could possibly go wrong with that?]. The company has raised $30 million in Series B funding.

And Finally ...

AI/ML must-knows

Foundation Models - any model trained on broad data at scale that can be fine-tuned to a wide range of downstream tasks. Examples include BERT and GPT-3. (See also Transfer Learning)

Few-shot learning - Supervised learning in which a model learns a task from only a small number of labelled examples.

Transfer Learning - Reusing parts or all of a model designed for one task on a new task with the aim of reducing training time and improving performance.

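Generative adversarial network - A generative model in which two networks are trained against each other: a generator creates new data instances that resemble the training data, and a discriminator tries to tell them apart from real data. They can be used to generate fake images.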

Deep Learning - A form of machine learning based on artificial neural networks with many layers.
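For readers who like to see ideas in code, here is a minimal sketch of the Transfer Learning entry above. It is a toy illustration under stated assumptions, not how any real system is built: the "pretrained" weights, the tiny dataset and all function names are made up for this example. The point it shows is that the reused layer stays frozen while only a small new "head" is trained for the new task.

```python
import math

# Hypothetical "pretrained" layer: these weights are invented for the example
# and stand in for a model that was previously trained on some other task.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Apply the frozen layer (linear map followed by ReLU). Never updated."""
    return [
        max(0.0, sum(w * xi for w, xi in zip(row, x)))
        for row in PRETRAINED_WEIGHTS
    ]


def predict(head, bias, x):
    """Logistic-regression head on top of the frozen features."""
    z = sum(h * f for h, f in zip(head, extract_features(x))) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=1000, lr=0.1):
    """Train only the new head with plain stochastic gradient descent."""
    head, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            error = predict(head, bias, x) - y  # gradient of the log loss
            head = [h - lr * error * f for h, f in zip(head, feats)]
            bias -= lr * error
    return head, bias


# Made-up dataset for the new task: the label is 1 when x[0] > x[1].
DATA = [([2.0, 0.0], 1), ([3.0, 1.0], 1), ([0.0, 2.0], 0), ([1.0, 3.0], 0)]

head, bias = train_head(DATA)
for x, y in DATA:
    print(x, y, round(predict(head, bias, x), 3))
```

Only three numbers (two head weights and a bias) are learned here, which is the sense in which reusing a model can cut training time. In practice the frozen part would be a large network such as the foundation models described above.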

Best,

Marcel Hedman

Nural Research Founder

www.nural.cc

If this has been interesting, share it with a friend who will find it equally valuable. If you are not already a subscriber, then subscribe here.

If you are enjoying this content and would like to support the work financially then you can amend your plan here from £1/month!