Welcome to Nural's newsletter focusing on how AI is being used to tackle global grand challenges.

Packed inside we have:

- Meta AI predicts shape of 600 million proteins

- Nvidia’s eDiffi is an impressive alternative to DALL-E 2 or Stable Diffusion

- and OpenAI Converge: A five-week program for founders building transformative AI companies

If you would like to support our continued work from £1, then click here!

Marcel Hedman

Key Recent Developments

AlphaFold’s new rival? Meta AI predicts shape of 600 million proteins

What: "Researchers at Meta have used AI to predict the structures of some 600 million proteins from bacteria, viruses and other microorganisms that haven’t been characterized.

The scientists generated the predictions using a ‘large language model’. Normally, language models are trained on large volumes of text. To apply them to proteins, the team instead fed the AI sequences of known proteins, which can be written down as a series of letters, each representing one of 20 possible amino acids. The network then learnt to fill in the sequences of proteins in which some of the amino acids were obscured."
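The training task described above is masked language modelling: hide some amino acids and ask the network to recover them. A minimal Python sketch of how one training example might be prepared is shown here. The 15% masking rate (borrowed from BERT-style training), the `#` mask symbol and the example sequence are assumptions for illustration, not details from Meta's work, and the network itself is not shown.

```python
import random
from typing import Dict, Optional, Tuple

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # one-letter codes of the 20 standard amino acids
MASK = "#"  # hypothetical symbol marking an obscured residue (assumption)


def mask_sequence(seq: str, mask_rate: float = 0.15,
                  seed: Optional[int] = None) -> Tuple[str, Dict[int, str]]:
    """Hide a random subset of residues.

    Returns the masked sequence and a dict {position: true amino acid};
    a model would be trained to predict those hidden letters.
    The 15% default rate is an assumption borrowed from BERT-style training.
    """
    if any(aa not in AMINO_ACIDS for aa in seq):
        raise ValueError("sequence contains a non-standard amino acid letter")
    rng = random.Random(seed)
    n_mask = max(1, round(len(seq) * mask_rate))
    positions = sorted(rng.sample(range(len(seq)), n_mask))
    masked = list(seq)
    targets = {}
    for i in positions:
        targets[i] = seq[i]  # remember the answer
        masked[i] = MASK     # obscure it in the model's input
    return "".join(masked), targets


seq = "MKTAYIAKQRQISFVKSHFSRQ"  # arbitrary example sequence
masked, targets = mask_sequence(seq, seed=0)
print(masked)
print(targets)
```

The model only ever sees `masked`; its loss is computed on how well it predicts the letters stored in `targets`.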

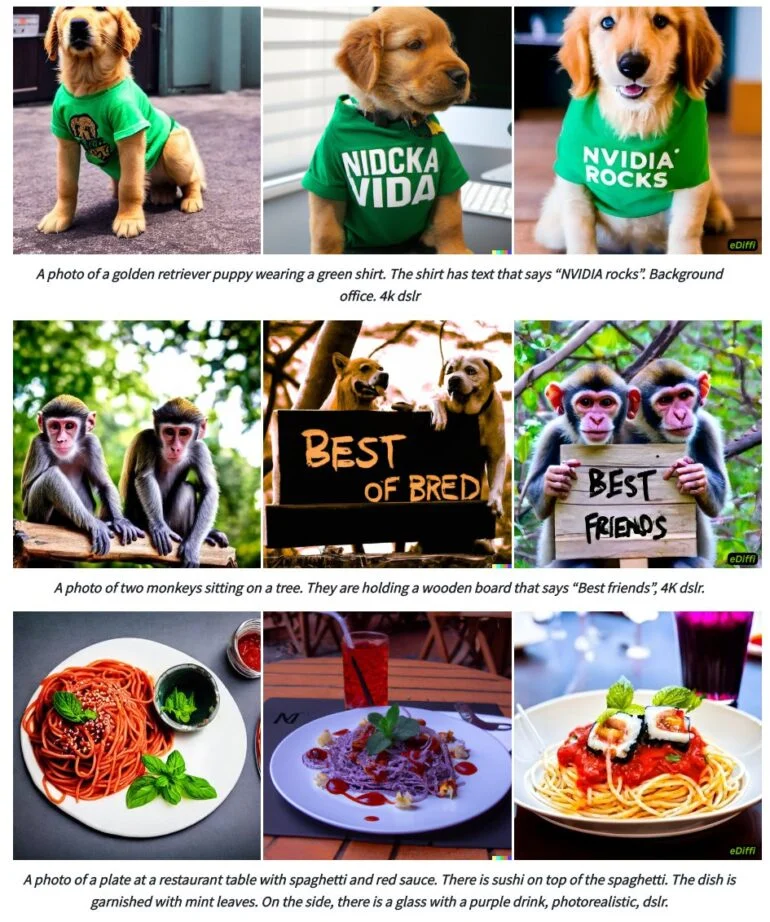

Nvidia’s eDiffi is an impressive alternative to DALL-E 2 or Stable Diffusion

What: Another text-to-image generator has arisen, this time from Nvidia, named eDiffi. According to Nvidia, eDiffi achieves better results than DALL-E 2 or Stable Diffusion by using an ensemble of expert denoisers, each specialised for a different stage of the generation process. In the examples shown by Nvidia, for instance, eDiffi is better at generating text in images and adheres more closely to the content of the original text prompt.

The right-hand column shows the eDiffi images, compared with those from Stable Diffusion and DALL-E.
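The idea behind "expert denoisers" is that different denoising networks handle different parts of the sampling process, from very noisy early steps to nearly clean late steps. The toy Python sketch here only illustrates that routing idea. The equal three-way split of timesteps, the expert names and the trivial `make_expert` denoisers are all assumptions standing in for trained networks; this is not Nvidia's implementation.

```python
from typing import Callable, List, Tuple

# A denoiser maps (noisy sample, timestep) to a slightly cleaner sample.
Denoiser = Callable[[float, int], float]


def make_expert(strength: float) -> Denoiser:
    # Hypothetical stand-in for a trained network: it ignores t and simply
    # shrinks the "noise" in x by a fixed factor.
    def denoise(x: float, t: int) -> float:
        return x * (1.0 - strength)
    return denoise


def pick_expert(t: int, num_steps: int,
                experts: List[Tuple[str, Denoiser]]) -> Tuple[str, Denoiser]:
    """Route timestep t (0 = least noisy) to the expert owning its interval.

    Assumption: timesteps are split into equal-width intervals, one per
    expert, with experts ordered from low-noise to high-noise.
    """
    index = min(t * len(experts) // num_steps, len(experts) - 1)
    return experts[index]


def sample(x: float, num_steps: int,
           experts: List[Tuple[str, Denoiser]]) -> Tuple[float, List[str]]:
    used = []
    for t in reversed(range(num_steps)):  # start at high noise, finish at t = 0
        name, denoise = pick_expert(t, num_steps, experts)
        x = denoise(x, t)
        used.append(name)
    return x, used


experts = [("low-noise", make_expert(0.5)),
           ("mid-noise", make_expert(0.5)),
           ("high-noise", make_expert(0.5))]
x, used = sample(1.0, 6, experts)
print(used)
# prints ['high-noise', 'high-noise', 'mid-noise', 'mid-noise', 'low-noise', 'low-noise']
```

A single shared model would use the same weights at every step; splitting by noise level lets each expert specialise, at the cost of storing several models.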

Google's research arm on Wednesday showed off an assortment of artificial intelligence (AI) projects it's incubating

https://www.axios.com/2022/11/03/google-artificial-intelligence

Find an overview of the AI projects being incubated at Google, which cover everything from mitigating climate change to helping novelists craft prose. On the "social good" side:

- Wildfire tracking: Google's machine-learning model for early detection is live in the U.S., Canada, Mexico and parts of Australia.

- Flood forecasting: A system that sent 115 million flood alerts to 23 million people in India and Bangladesh last year has since expanded to 18 additional countries (15 in Africa, plus Brazil, Colombia and Sri Lanka).

- Maternal health/ultrasound AI: Using an Android app and a portable ultrasound monitor, nurses and midwives in the U.S. and Zambia are testing a system that assesses a fetus' gestational age and position in the womb.

- Preventing blindness: Google's Automated Retinal Disease Assessment (ARDA) uses AI to help health care workers detect diabetic retinopathy. More than 150,000 patients have been screened by taking a picture of their eyes on their smartphone.

- The "1,000 Languages Initiative": Google is building an AI model that will work with the world's 1,000 most-spoken languages.

AI Ethics & 4 good

🚀 A teacher allows AI tools in exams – here’s what he learned

🚀 Welp, There Goes Twitter's Ethical AI Team, Among Others as Employees Post Final Messages

🚀 Artificial intelligence and molecule machine join forces to generalize automated chemistry

🚀 Satellite Image Deep Learning Newsletter

Other interesting reads

🚀 Google Pays $100M for AI Avatar Startup Alter

🚀 Lockheed Martin Successfully Completes First Autonomous Black Hawk Helicopter Flight

🚀 Best NLP Papers — October 2022

🚀 Large language models are not zero-shot communicators

🚀 OpenAI Converge: A five-week program for founders building transformative AI companies

Cool companies found this week

Climate

AiDash - Making utilities, energy, and other core industries resilient, efficient, and sustainable with satellites and AI. They recently announced a strategic investment of $10 million from SE Ventures, a Silicon Valley-based global venture fund.

and finally...

AI/ML must knows

Foundation Models - any model trained on broad data at scale that can be fine-tuned to a wide range of downstream tasks. Examples include BERT and GPT-3. (See also Transfer Learning)

Few-shot learning - Learning a new task from only a handful of labelled examples.

Transfer Learning - Reusing parts or all of a model designed for one task on a new task with the aim of reducing training time and improving performance.

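Generative adversarial network - A generative model in which two networks are trained against each other: a generator creates new data instances that resemble the training data, while a discriminator tries to tell them apart from real examples. They can be used to generate realistic fake images.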

Deep Learning - A form of machine learning based on artificial neural networks with many layers.
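Transfer learning and few-shot learning often go together: a pretrained model is kept frozen and only a small new output layer (a "head") is trained on a handful of labelled examples. A minimal Python sketch of that pattern follows. `pretrained_features` is a hypothetical stand-in for a real pretrained network, and the task (is |x| > 1?), the four examples and the training settings are illustrative assumptions.

```python
import math
from typing import List, Tuple


def pretrained_features(x: float) -> List[float]:
    # Hypothetical stand-in for a frozen pretrained model: it maps an input
    # to a fixed feature vector and is never updated during training.
    return [x, x * x, 1.0]  # the constant 1.0 acts as a bias feature


def train_head(examples: List[Tuple[float, int]],
               epochs: int = 2000, lr: float = 0.2) -> List[float]:
    """Fit only a new logistic-regression head on top of the frozen features."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for x, y in examples:
            f = pretrained_features(x)
            z = sum(wi * fi for wi, fi in zip(w, f))
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of label 1
            for j in range(3):
                grad[j] += (p - y) * f[j] / len(examples)  # mean log-loss gradient
        w = [wi - lr * gj for wi, gj in zip(w, grad)]  # full-batch gradient step
    return w


def predict(w: List[float], x: float) -> int:
    z = sum(wi * fi for wi, fi in zip(w, pretrained_features(x)))
    return 1 if z > 0 else 0


# Few-shot: only four labelled examples of the task "is |x| > 1?"
examples = [(-2.0, 1), (-0.5, 0), (0.3, 0), (1.8, 1)]
w = train_head(examples)
print([predict(w, x) for x in (-3.0, 0.0, 2.5)])  # prints [1, 0, 1]
```

Because the feature extractor is reused unchanged, only three weights have to be learned, which is what makes four examples enough here.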

Best,

Marcel Hedman

Nural Research Founder

www.nural.cc

If this has been interesting, share it with a friend who will find it equally valuable. If you are not already a subscriber, then subscribe here.

If you are enjoying this content and would like to support the work financially, then you can amend your plan here from £1/month!